Ever since the release of Runway’s first video generation models, which really were a step above anything else at the time, many developers have tried to mimic their efforts. A slew of powerful closed source models have since entered the video generation scene online. These notably include Google’s Veo-3, OpenAI’s SORA, and Runway Gen-3, which are so powerful they have started to replace the need for stock footage at scale.

In the past few months, we have been fortunate enough to witness the rise of several, powerful open-source models that attempt to match the capabilities of their closed-source competitors. In this article, we will look at the three that have received the most attention: LTX-Video, HunyuanVideo, and Wan 2.1.

In this post we will cover:

- A comparison of the three models

- A walk-through on how to run them with the ComfyUI

Comparing the three video generation models

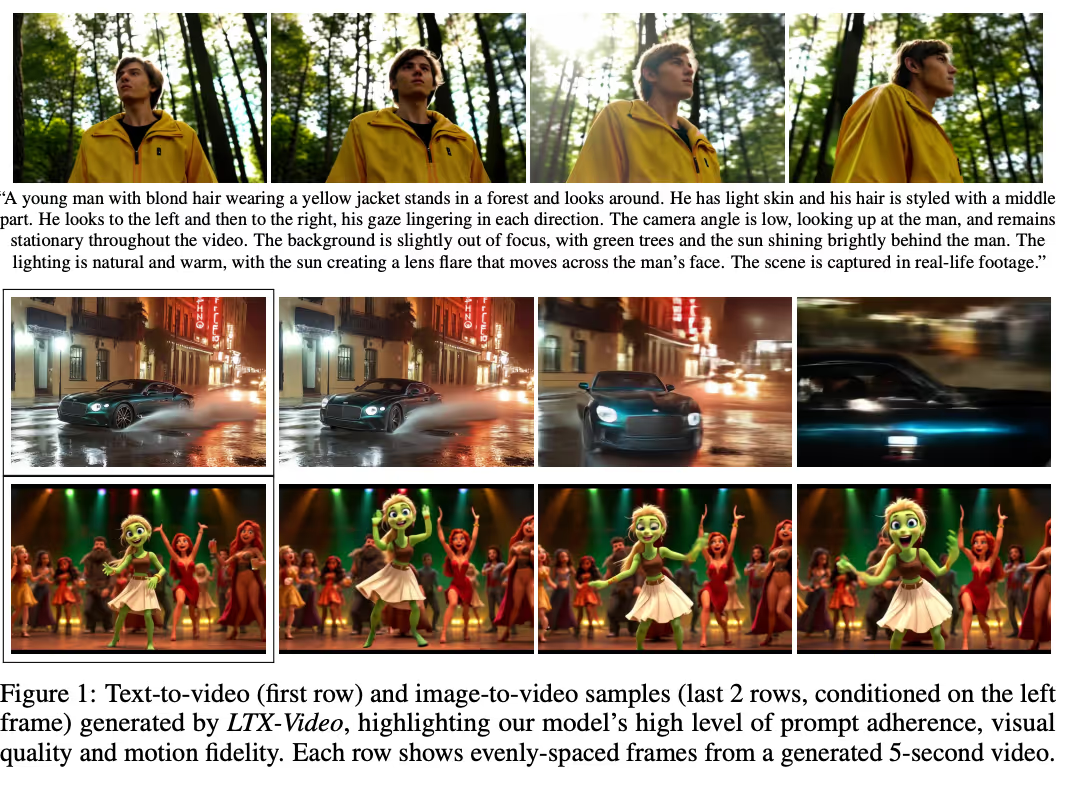

LTX-Video was the first of these diffusion transformer based models to release, and is by far the fastest and least computationally expensive to run. As a consequence, it is also arguably the least performative of the models we are comparing today. The model was trained on a large-scale dataset of diverse videos to generate high-resolution videos with realistic and diverse content. It can generate high-quality videos in near real-time, notably generating 24 FPS videos at 768x512 resolution at speeds faster than it takes to watch them on an NVIDIA H200.

LTX-Video is capable of both text to video and image to video generation. For several months it was the most performant image-to-video model available until the release of Wan 2.1 and HunyuanVideo’s image-to-video models. To learn more about LTX-Video, we recommend reading their Github page and paper.

HunyuanVide is an innovative open-source video foundation model that demonstrated performance in video generation comparable to, or even surpassing, that of leading closed-source models, according to their own testing. Upon release it quickly became more popular than LTX-Video, which was released earlier in the year. This popularity has only increased with the more recent release of their image-to-video models.

HunyuanVideo is a video generative model with over 13 billion parameters, making it the largest among all open-source models at the time of its release. The model boasts high visual quality, understanding of physical motion dynamics, text-video alignment capabilities to handle textual objects, and knowledge of advanced filming techniques. “According to evaluations by professionals, HunyuanVideo outperforms previous state-of-the-art models, including Runway Gen-3, Luma 1.6, and three top-performing Chinese video generative models.” Source

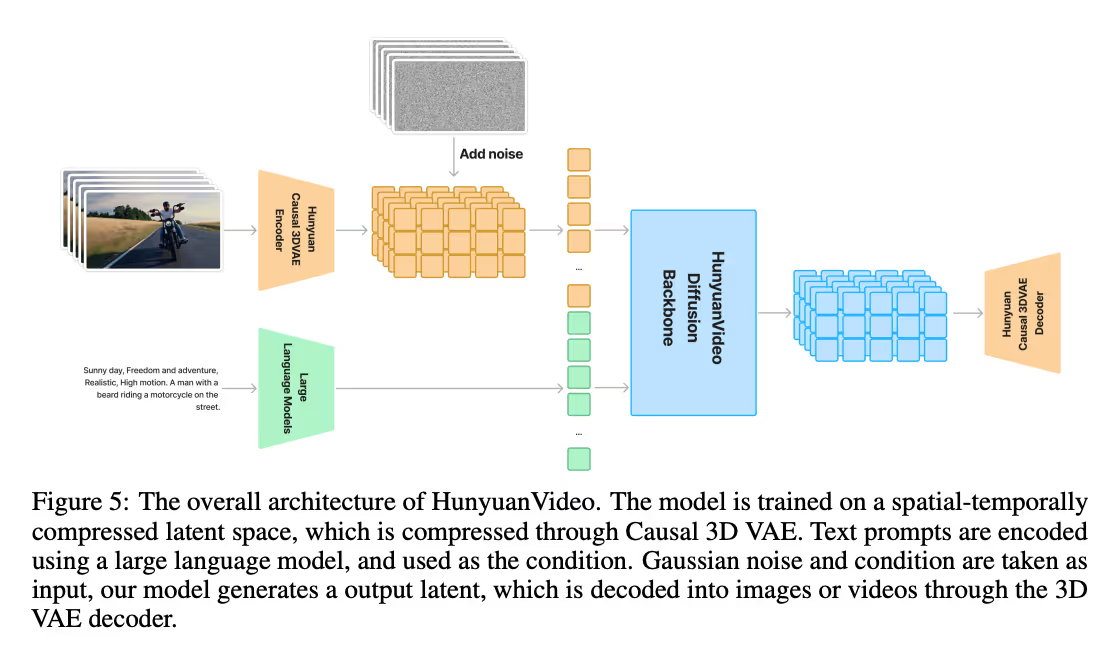

To achieve this, HunyuanVideo itself encompasses a comprehensive framework. This includes careful data curation, the development of an advanced architectural design (shown above), iterative and progressive model scaling and training, and an efficient infrastructure tailored for large-scale model training and inference. The innovative framework creates a powerful apparatus for video generation from scratch. While more computationally expensive than LTX-Video, it is significantly more powerful and accurate.

To learn more about HunyuanVideo, we recommend viewing their Github page and reading their paper.

The most recently released major open-source video generation model is Wan2.1. Wan2.1 is exciting because it consistently outperforms existing open-source models and state-of-the-art commercial solutions across multiple benchmarks. This includes consumer grade devices: the T2V-1.3B model requires only 8.19 GB VRAM, making it compatible with almost all consumer-grade GPUs.

Wan2.1 excels in text-to-video, image-to-video, video editing, text-to-image, and video-to-audio generation tasks. Additionally, Wan2.1 is the first video model capable of generating both Chinese and English text, allowing users to use the two languages to generate writing in video.

At the time of writing this article, the paper for Wan2.1 has not been publicly released, so we can only conjecture about how it achieves these capabilities. We believe it uses a similar architecture to HunyuanVideo and LTX-Video, with a diffusion transformer backbone and a causal 3d VAE. We do know that the Wan-VAE delivers “exceptional efficiency and performance, encoding and decoding 1080P videos of any length while preserving temporal information, making it an ideal foundation for video and image generation.”

To learn more about Wan2.1, we recommend viewing their Github page.

Given the performance and output quality we are going to focus our tutorial on Wan2.1.

Running the ComfyUI

To access and run Wan2.1 with White Fiber, we have created a quick guide to assist with the process. Users can read this guide, and expect to leave with a fully running version of the ComfyUI in their local browser that is run on the cloud H200 we provide you. Let’s begin.

- SSH into your machine

To access your machine, SSH in from your local terminal:

ssh root@<your ip address

- Clone the ComfyUI Repo

On your SSH terminal, clone the ComfyUI repo https://github.com/comfyanonymous/ComfyUI onto your directory of choice.

git clone https://github.com/comfyanonymous/ComfyUI

- Download the model files onto your machine

Next, we need to download all the necessary model files onto our machine. Paste the following script into your machine from the ComfyUI/ directory:

Wget -O models/diffusion_models/wan2.1_i2v_720p_14B_fp16.safetensors https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/diffusion_models/wan2.1_i2v_720p_14B_fp16.safetensors

Wget -O models/diffusion_models/wan2.1_t2v_14B_fp16.safetensors

https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/diffusion_models/wan2.1_t2v_14B_fp16.safetensors

Wget -O models/text_encoders/umt5_xxl_fp16.safetensors https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/text_encoders/umt5_xxl_fp16.safetensors

Wget -O models/vae/wan_2.1_vae.safetensors https://huggingface.co/Comfy-Org/Wan_2.1_ComfyUI_repackaged/resolve/main/split_files/vae/wan_2.1_vae.safetensors

This should download everything we need to run both the text-to-video and image-to-video workflows for the ComfyUI and Wan2.1.

- Install necessary packages

Running the ComfyUI requires first installing all the necessary python packages. Fortunately, they provide us with a requirements.txt file to use to install these packages. Run the following script to install all the needed packages.

pip install -r requirements.txt

- Run the ComfyUI

We are now ready to run the ComfyUI! Run the following script to launch the web GUI:

python main.py

This will output a localhost url for us. Save that value for later.

- Connect to local VS Code instance

In a previous tutorial, we outlined the process of accessing your machine with VSCode, which you will need to download onto your local machine if you have not already. Now that we have launched the ComfyUI, we need to connect to it to use it. To achieve this, we can use SSH hosting with VS Code.

Launch VS Code, and click the “Connect to…” button in the center console. This will open the dropdown at the top of the VS Code window. Click “Connect to host…”, and scroll to the bottom of the new dropdown and click “+ Add New SSH Host…”. This will prompt you to paste the same SSH code we used to access our machine, example ssh root@<your ip address. With that, we can now access our machine from VS Code at any time using the connect button.

Once we are in the connected window, we can access our ComfyUI window by pressing command + shift + p and finding the “Simple Browser”. Click in and paste the localhost URL from step 5. Click the arrow button on the right hand side of the URL bar. This will launch the web GUI in your local browser.

- Generate videos

Finally, we are ready to begin generating videos. Download the Wan 2.1 workflow of your choice, either image-to-video or text-to-video, from the official ComfyUI workflow examples. Use them to load the workflow onto your ComfyUI instance using the top navigation bar’s Workflow > Open > [your file].

.gif)

Once that’s ready, we can start generating! Generate the example video first to make sure everything is working, and start prompting. At the current settings, you should generate the videos in about a minute on an NVIDIA H200.

.avif)

.webp)

.png)