Introduction: A Rising Tide Lifts All Boats

When OpenAI first announced its Strawberry model last fall, WhiteFiber CTO Tom San Filippo and I were thrilled. Here was a major innovation—a reasoning model capable of chain-of-thought processing—that we believed would spark a wave of excitement and competition in the open-source community. Our assumption was straightforward: more competition drives more innovation, which in turn drives more demand for compute. The entire industry would benefit from such a rising tide, ushering in more powerful open-source models and more opportunities for compute-intensive applications.

Enter DeepSeek R1: A Game-Changer?

On January 20, DeepSeek announced the release of DeepSeek R1, the first open-sourced reasoning model. Early benchmarks suggest R1 performs as well as or better than OpenAI’s o1 model, an impressive feat in itself. What really turned heads, however, was DeepSeek’s claim on January 27 that R1 was trained on just 2,048 H800 GPUs over a period of two months. If true, this represents a massive cost reduction compared to previous estimates for training similarly capable large-scale models.

- Potential Training Cost Savings: By some projections, the cost of training a top-tier reasoning model can range anywhere from $10 million to $100 million depending on GPU type, availability, and training duration. DeepSeek’s claim suggests a possible 90–95% reduction in cost.

- Market Reaction: Instead of celebrating this efficiency breakthrough, the market panicked. NVIDIA reportedly lost $600 billion in market capitalization in a single day, and other chip makers—Broadcom, TSMC, and more—faced major sell-offs.
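To put the claimed training run in perspective, here is a rough back-of-the-envelope sketch in Python. The GPU count and duration are DeepSeek's claim as quoted above; the 60-day approximation of "two months" and the $2.00 per GPU-hour rental rate are assumptions chosen purely for illustration, not figures from DeepSeek.

```python
# Back-of-the-envelope estimate of the final training run's GPU cost.
# GPU count and duration follow DeepSeek's claim; the hourly rate is an
# ASSUMED rental price for illustration, not a figure from DeepSeek.

GPUS = 2_048                      # H800 GPUs (claimed)
DAYS = 60                         # "two months", approximated as 60 days (assumption)
ASSUMED_USD_PER_GPU_HOUR = 2.00   # hypothetical rental rate (assumption)


def training_cost(gpus, days, usd_per_gpu_hour):
    """Return (total GPU-hours, total cost in USD) for a training run."""
    gpu_hours = gpus * days * 24
    return gpu_hours, gpu_hours * usd_per_gpu_hour


def reduction_vs(baseline_usd, cost_usd):
    """Fractional cost reduction relative to a baseline training budget."""
    return 1 - cost_usd / baseline_usd


gpu_hours, cost = training_cost(GPUS, DAYS, ASSUMED_USD_PER_GPU_HOUR)
print(f"{gpu_hours:,} GPU-hours, about ${cost:,.0f}")

# Compare against the low and high ends of the $10M to $100M range.
for baseline in (10e6, 100e6):
    print(f"vs ${baseline:,.0f}: {reduction_vs(baseline, cost):.0%} reduction")
```

Under these assumptions the run comes to roughly 2.9 million GPU-hours and about $5.9 million, which is a reduction of about 94% against a $100 million baseline but only about 41% against a $10 million one. In other words, the 90–95% figure holds only when compared with the high end of the range, and the result is very sensitive to the assumed hourly rate.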

At first glance, this reaction seemed counterintuitive. Shouldn’t more efficient training spur demand for further development and thus drive greater demand for GPUs? Many in the ML/AI and HPC community (myself included) were stunned by the negative response. In a group chat with friends who work in the industry, one person texted, "Nvidia is on sale today! Just use promo code: DEEPSEEK", a tongue-in-cheek way of saying that it was a great time to buy the dip.

Be Skeptical

Are DeepSeek’s GPU Claims Accurate?

DeepSeek’s numbers invite scrutiny. Scale AI CEO Alexandr Wang has suggested that DeepSeek has 50,000 H100 GPUs at its disposal, but that it’s a dirty little secret that no one can talk about due to export controls. Other sources put that figure at a minimum of 20,000 H100s. The big question: could they really have pulled off this feat with just 2,048 H800s?

Transfer Learning Changes the Equation

DeepSeek’s whitepaper clarifies that R1 was not trained from scratch: the training pipeline started from their existing DeepSeek V3 base model, and the distilled R1 variants were fine-tuned from open-source models such as Llama 3.3 70B. This kind of transfer learning can drastically reduce the final training run’s compute requirements and time-to-market, but that doesn’t negate the enormous cost and resources poured into developing those foundational models in the first place.

- Holistic Cost Analysis: The cumulative investment includes initial pre-training, fine-tuning, and the ongoing infrastructure costs for both DeepSeek V3 and Llama 3.3. Looking at the final phase alone can be misleading if we ignore the resources consumed along the way.

Innovation Under Constraints

For the sake of argument, let’s take DeepSeek at face value and assume they truly needed only 2,048 H800s for the final R1 training. According to their whitepaper, they achieved this efficiency partly by optimizing at the level of PTX (Parallel Thread Execution), NVIDIA’s low-level intermediate instruction set that sits beneath CUDA. This is especially noteworthy given export restrictions on cutting-edge chips like H100s to China.

More Juice from a Smaller Orange

Because DeepSeek doesn’t have unlimited access to the most powerful GPUs at scale, they were forced to squeeze more performance out of what they could access—an H800, which is roughly comparable to but still less capable than the H100. If these innovations succeed in pushing efficiency boundaries, then organizations not bound by those same hardware restrictions could theoretically use the same techniques on even more powerful hardware.

- Key Insight: No machine learning engineer has ever said, “Now that we’re more efficient, let’s use fewer GPUs.” Efficiency improvements typically lead to larger and more complex models, which in turn drives more demand for compute.

Closed Source vs. Open Source: Implications for Everyone

While it may seem obvious, it’s critical to remember:

- Closed Source Models (OpenAI, Anthropic, Cohere, etc.): Benefits primarily flow to the model creator and the cloud provider running those workloads.

- Open Source Models (like DeepSeek R1): Benefits extend to everyone, including startups, individual developers, enterprises, and an entire ecosystem of service providers.

This is reminiscent of an “Empire vs. Rebels” dynamic. Hyperscalers and “Neocloud” providers (e.g., Lambda Labs, Nebius) often host open-source model development and deployment. Before R1, these platforms were sometimes jokingly called “Llama factories” due to the demand for hosting, fine-tuning, and inference on Llama-based models. Now, any startup or enterprise can take advantage of R1’s reasoning capabilities.

Jevons Paradox: Why Efficiency Spurs Demand

Lower Cost, Higher Demand

If R1 truly lowers the compute requirements and cost to deploy a cutting-edge reasoning model, it immediately opens up previously cost-prohibitive use cases. Many AI applications—especially those requiring complex reasoning or large-scale inference—are shelved mainly because they don’t pencil out financially.

- Jevons Paradox: Originally observed in energy consumption, the Jevons paradox holds that improved efficiency can actually increase total demand for a resource. If training and inference become cheaper, more people and organizations will leverage AI, thus increasing overall GPU consumption.
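The paradox can be made concrete with a toy model. The Python sketch below assumes a constant-elasticity demand curve, which is a standard textbook simplification and not something measured for the GPU market; the function name, the baseline of 1,000 GPU-hours, and the 10x efficiency gain are all hypothetical values for illustration.

```python
def gpu_hours_after_efficiency_gain(baseline_gpu_hours, efficiency_gain, elasticity):
    """GPU-hours demanded after compute per unit of AI work falls by `efficiency_gain`x.

    Assumptions (illustrative only):
    - the price per unit of AI work falls in proportion to the efficiency gain;
    - demand for AI work follows a constant-elasticity curve,
      i.e. work demanded scales as price ** -elasticity.
    """
    work_multiplier = efficiency_gain ** elasticity   # more work demanded at the lower price
    gpu_hours_per_unit_work = 1 / efficiency_gain     # each unit of work needs less compute
    return baseline_gpu_hours * work_multiplier * gpu_hours_per_unit_work


# Hypothetical scenario: 1,000 GPU-hours of baseline demand, 10x efficiency gain.
for elasticity in (0.5, 1.0, 1.5):
    total = gpu_hours_after_efficiency_gain(1000.0, 10.0, elasticity)
    print(f"elasticity={elasticity}: {total:,.0f} GPU-hours")
```

In this model total GPU demand scales as the efficiency gain raised to the power (elasticity - 1). Demand for compute falls when elasticity is below 1, stays flat at exactly 1, and rises when it is above 1. The Jevons argument in this article amounts to a bet that demand for AI work is highly elastic, so cheaper reasoning models unlock enough new use cases to outweigh the compute saved per task.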

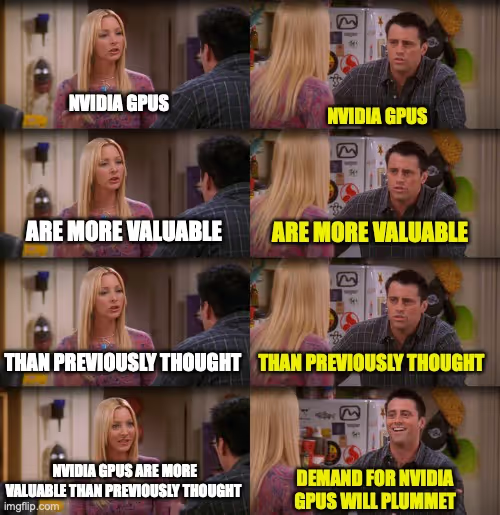

Market Overreaction?

The market’s knee-jerk response to the DeepSeek announcement may have been rooted in fears that GPU demand would plummet if top-tier models become drastically cheaper to train. However, history suggests the opposite: more accessible technology generally leads to greater adoption and spurs more investment in supporting infrastructure.

One Month Later: Surging Demand for H200 GPUs

It’s now been almost a month since DeepSeek R1’s release. As many of us predicted, there has been a massive increase in demand for H200 GPUs, which were already in short supply. This aligns with the broader pattern seen in AI computing: more efficient models don’t dampen demand; they catalyze it.

- Case in Point: Reports from hardware suppliers and cloud providers indicate increased lead times for new GPU orders, further supporting the argument that any efficiency gains in training large models ultimately drive usage upward.

Conclusion: A Paradigm Shift, Not a Zero-Sum Game

DeepSeek R1 marks a critical milestone in open-source AI innovation. Whether or not DeepSeek’s claims about GPU usage are entirely accurate, the broader implications are clear:

- Open Source Triumphs: Accessible models accelerate AI adoption across industries, driving demand for more compute, not less.

- Market Dynamics Are Complex: Investors may fear short-term disruptions, but historically, increased efficiency spurs additional growth rather than curtailing it.

- Future of HPC: As the boundaries of efficiency are pushed further, the appetite for large-scale compute will continue to grow, reinforcing the idea that more efficient AI models ultimately need more hardware to push the envelope.

In a world where reasoning models like DeepSeek R1 become increasingly accessible, the real winners are those who can adapt quickly—innovators in training methodologies, GPU manufacturers with the next generation of chips, and the myriad companies deploying AI solutions at scale. Far from a zero-sum game, this efficiency revolution in AI promises an expanding ecosystem, bigger models, and ultimately broader market opportunities.

References & Supporting Data

- NVIDIA GPU Roadmap: NVIDIA Architecture Roadmaps

- Jevons Paradox: Jevons, W. S. (1865). The Coal Question. (Famous exposition on how increased efficiency in coal usage led to greater consumption.)

- Open Source AI Adoption Stats: Recent industry surveys (e.g., State of AI Report) highlight how rapidly open-source LLM usage is growing, driving significant cloud GPU consumption.

- Estimated Training Costs: “AI and Compute” analyses from OpenAI’s blog (2018) and subsequent publications indicate exponential growth in training compute over the past decade.

With these insights, it’s evident that efficiency and innovation tend to go hand in hand with increased compute demand. The DeepSeek R1 story, whether exactly as advertised or not, underscores how open-source breakthroughs can rapidly reshape market dynamics—and likely push the boundaries of AI even further.
