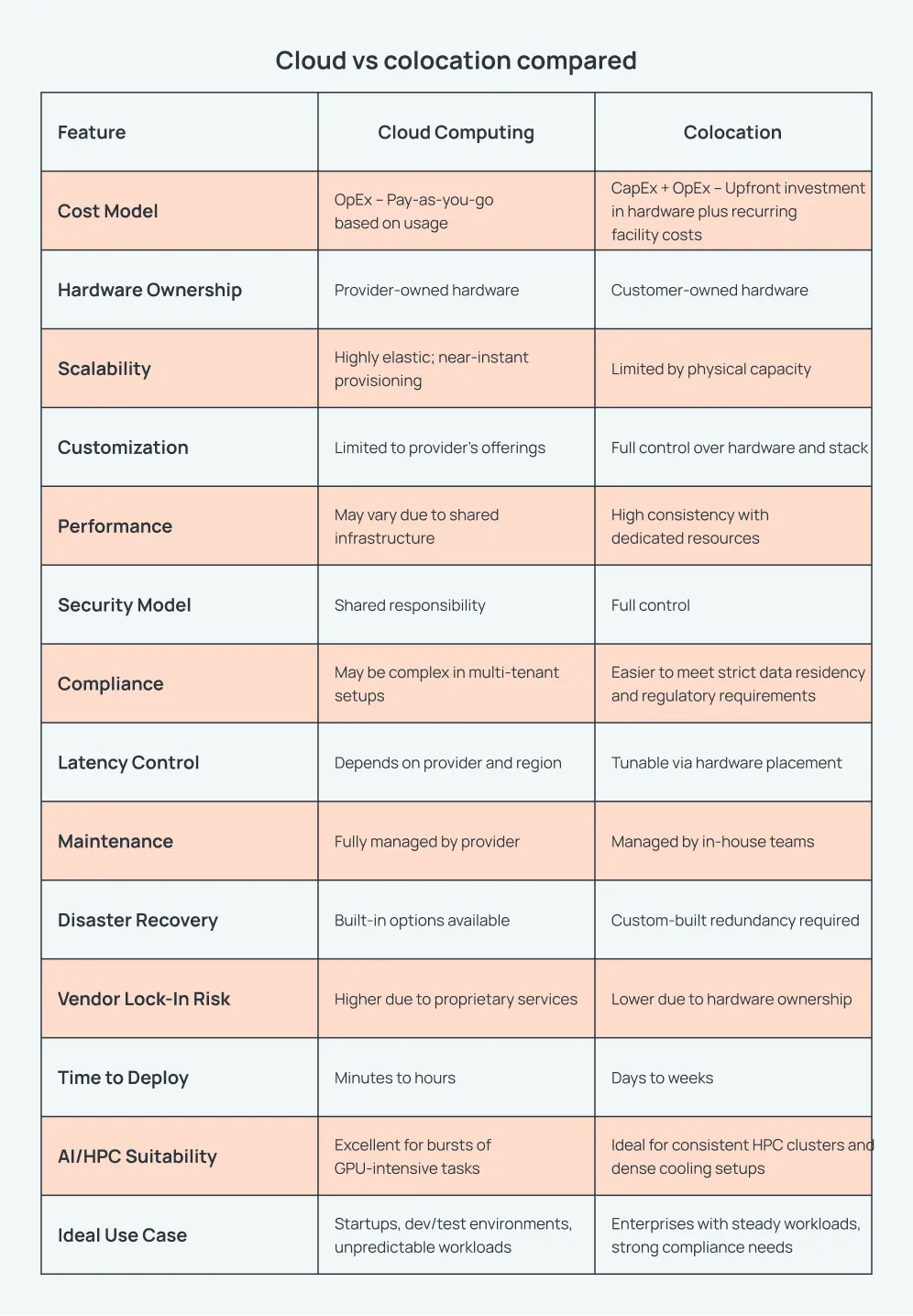

Choosing the right infrastructure for compute-intensive workloads like training large models, running inference at scale, and supporting high-performance computing is a mission-critical business decision.

Understanding the tradeoffs between cloud and colocation, and how to blend their strengths, is key to building resilient, cost-effective, high-performing AI infrastructure.

DEFINING THE FUNDAMENTALS

What is cloud computing?

Cloud computing delivers computing services—like servers, storage, and GPUs—over the internet from a public or private cloud provider. It’s highly scalable, fast to deploy, and often used for elastic workloads where flexibility is a top priority.

Cloud services are typically consumed on a pay-as-you-go model (OpEx), and the provider manages the underlying hardware and data center operations. This makes it an attractive option for startups and teams looking to move fast without capital investment.

What is colocation?

Colocation (or "colo") refers to renting physical space, power, and network connectivity in a third-party data center where you deploy your own hardware. While the data center provider handles the facility, power, cooling, and physical security, you own and manage your servers and infrastructure stack.

Colocation shifts control and ownership back to the customer—ideal for companies that want to optimize cost, customize their stack for specialized AI/HPC workloads, or enforce strict compliance and data sovereignty.

Control and customization

With colocation, you gain full control of your infrastructure. Want to use custom-built GPU servers with liquid cooling? Run bare-metal Kubernetes optimized for large language model training? You’re free to build exactly what your AI workloads need.

In contrast, cloud providers limit customization to the configurations they offer, which is ideal for speed but often suboptimal for performance tuning or cost optimization at scale. For advanced AI/ML pipelines, colocation often unlocks deeper efficiency.

Scalability and flexibility

Cloud’s core value is agility. Spinning up 100+ GPUs in minutes is possible with the right cloud quota, but pay-as-you-go costs climb with every GPU and every hour, so sustained large-scale usage gets expensive quickly. Colocation scales differently: more slowly, but with long-term efficiency and fixed pricing.

Hybrid cloud-colo strategies offer flexibility: you can use cloud for spikes in demand and colocation for baseline workloads.
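To make the baseline-versus-spike idea concrete, here is a minimal Python sketch. It assumes you have an hourly GPU demand series and a fixed colo fleet size; the function name `split_demand` and all the numbers are hypothetical placeholders, not measurements from any real deployment.

```python
def split_demand(hourly_gpu_demand, colo_gpus):
    """Split hourly GPU demand into colo GPU-hours and cloud-burst GPU-hours.

    Assumption: colo capacity is used first, and anything above it
    overflows to the cloud.
    """
    colo_hours = 0
    cloud_hours = 0
    for demand in hourly_gpu_demand:
        colo_hours += min(demand, colo_gpus)        # served by owned hardware
        cloud_hours += max(demand - colo_gpus, 0)   # overflow sent to cloud
    return colo_hours, cloud_hours


# Hypothetical demand: GPUs needed in each of six consecutive hours.
demand = [64, 64, 72, 160, 200, 64]
colo, cloud = split_demand(demand, colo_gpus=64)
print(f"colo GPU-hours: {colo}, cloud GPU-hours: {cloud}")
```

Trying a few values of `colo_gpus` against your own demand history shows how much of the load a given baseline fleet would absorb and how much would still burst to the cloud.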

Performance and reliability

AI workloads demand consistent high performance. With cloud, noisy neighbors in multi-tenant environments can affect throughput. Colocation avoids this with dedicated hardware, direct interconnects, and the ability to fine-tune thermal and power environments.

For example, at WhiteFiber, our AI + HPC colocation sites feature high-density racks and GPU-specific airflow zoning to support critical workloads that can't afford variance.

SPECIFIC CONSIDERATIONS FOR AI AND HPC WORKLOADS

Hardware acceleration and specialization

Training deep learning models often requires access to specialized hardware or even custom accelerators. Cloud may offer access to these—but at a premium, and often with limited availability.

With colocation, you can deploy purpose-built systems tailored to your model architecture.

Data security and sovereignty

AI workloads often involve sensitive data—from medical images to financial records. With colocation, your data remains in systems you physically control, easing concerns over residency, regulatory compliance (e.g., HIPAA, GDPR), and third-party access.

HYBRID COLOCATION AND CLOUD: THE BEST OF BOTH WORLDS

Hybrid architecture models

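Hybrid infrastructure lets you split workloads between cloud and colo for maximum efficiency, for example by keeping baseline workloads in colocation and bursting to the cloud for spikes in demand.

This approach lowers cost, reduces cloud dependency, and improves performance for AI training and inference.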

Integrated solutions

WhiteFiber offers direct private connectivity to the cloud from our facilities—ideal for building fast, secure hybrid topologies without internet latency or egress fees.

INFRASTRUCTURE EVALUATION CHECKLIST

To make an informed decision among colocation, cloud, and hybrid, evaluate the following:

Choosing a provider

- Do they offer GPU-optimized environments?

- Can they support high-density cooling and power needs?

- Is low-latency cloud interconnect available?

- Is their facility Tier III or IV rated?

- What SLAs and remote hands services are offered?

Workload characteristics

- Are your compute needs predictable or bursty?

- Do your models require large memory footprints or inter-GPU bandwidth?

- Will you need specific GPU types or architectures?
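One rough way to answer the "predictable or bursty" question is to look at the peak-to-average ratio of your historical GPU demand. The sketch below is illustrative only: the function name `peak_to_average` is hypothetical, and where you draw the line between "predictable" and "bursty" is a judgment call for your team.

```python
from statistics import mean


def peak_to_average(hourly_gpu_demand):
    """Return peak demand divided by mean demand.

    A ratio near 1.0 suggests steady, predictable load; a high ratio
    suggests bursty load.
    """
    average = mean(hourly_gpu_demand)
    if average == 0:
        raise ValueError("demand series has no usage to analyze")
    return max(hourly_gpu_demand) / average


# Hypothetical demand profiles, in GPUs needed per hour.
steady = [10, 10, 10, 10]
bursty = [0, 0, 0, 40]
print(peak_to_average(steady), peak_to_average(bursty))
```

A steady profile tends to favor owned hardware in colocation, while a bursty one leaves more room for cloud capacity.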

Costs

- Are you optimizing for speed (cloud) or long-term cost control (colocation)?

- Will your OpEx-based cloud spend scale linearly or explode as workloads grow?

- Can CapEx for hardware provide ROI over 12-24 months?
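The ROI question above can be framed as a simple break-even calculation. The following is a minimal sketch, assuming a one-time hardware purchase, a flat monthly colocation fee, and a flat monthly cloud bill for the equivalent capacity. The function name `break_even_months` and every dollar figure are hypothetical placeholders; substitute your own quotes.

```python
import math


def break_even_months(capex, colo_monthly, cloud_monthly):
    """Return the first whole month at which cumulative colo cost
    (capex plus monthly fees) is no higher than cumulative cloud cost.

    Returns None if colo never catches up, i.e. its monthly cost is
    not lower than the cloud's.
    """
    monthly_savings = cloud_monthly - colo_monthly
    if monthly_savings <= 0:
        return None
    return math.ceil(capex / monthly_savings)


# Hypothetical figures in USD.
months = break_even_months(capex=240_000, colo_monthly=5_000, cloud_monthly=20_000)
print(f"break-even after {months} months")
```

This simplified model ignores depreciation, staffing, financing, and hardware resale value, so treat the result as a first-pass estimate rather than a full TCO analysis.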

Control and compliance requirements

- Do you need root access, air-gapped systems, or custom firmware?

- Are you working in regulated industries or jurisdictions with strict data localization laws?

MAKE AI INFRASTRUCTURE YOUR COMPETITIVE ADVANTAGE

Whether you’re training frontier models, deploying real-time inference systems, or running hybrid pipelines, your infrastructure choices directly impact your velocity, compliance, and margin.

WhiteFiber helps enterprises build AI infrastructure that outperforms the cloud in cost, performance, and control—without sacrificing flexibility. With hybrid-ready connectivity, best-in-class network performance, and expert technical support, we enable you to turn infrastructure into a strategic advantage.

Contact our team to explore a custom colocation or hybrid deployment designed for your unique needs.

FAQ

Q: What is the difference between cloud computing and colocation?

Q: What is the difference between data center and cloud?

Q: Is colocation cheaper than cloud?

Q: What is the difference between colocation and hosting?
