So, you had a great idea for how to use AI and now you’re turning that idea into reality. You’ve got a killer use case, a large data set, and now it’s time to get to training. The next question to answer is what kind of hardware do you need in order to train, fine-tune, and deploy your great idea.

You know you need powerful hardware, but there are many options to choose from. At one level, it’s simple. Newer more powerful GPUs will be faster. They are also more expensive and harder to get access to. This view also doesn’t take into account a number of other variables such as - memory, architecture differences, and suitability for long-context models - that when factored in may dramatically (or not) affect your choice.

This guide is intended to help AI teams choose the best infrastructure for training, fine-tuning, and running advanced LLMs. We'll cover the variables mentioned above as well as the particular strengths of the most popular GPUs available today.

NVIDIA’s Latest GPU Lineup

.avif)

NVIDIA H100 (Hopper)

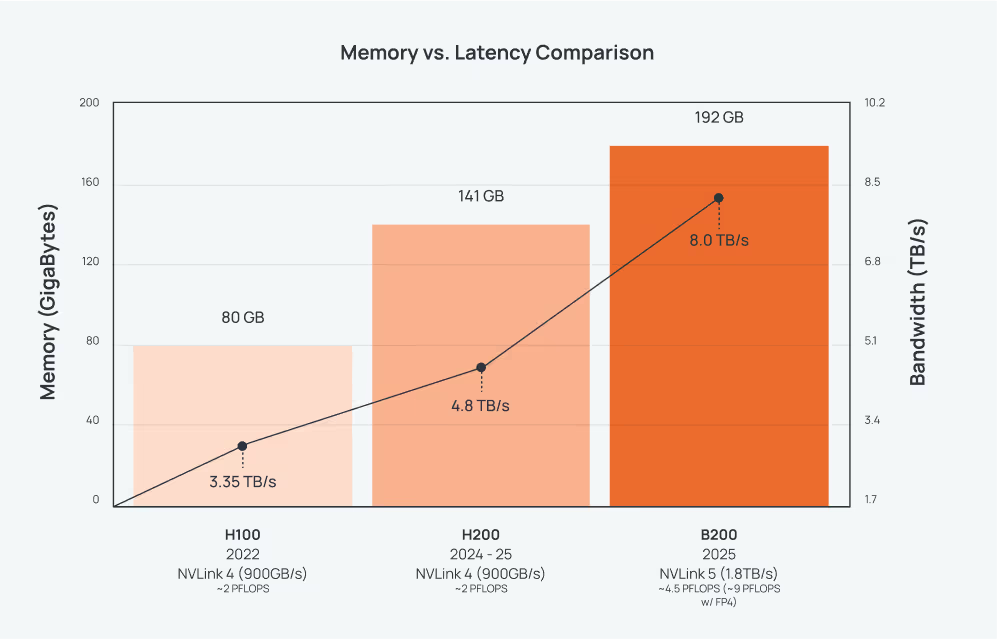

Released in 2022, H100 GPUs significantly improved LLM training with:

- 80GB HBM3 memory (3.35 TB/s bandwidth)

- FP8 precision support, delivering ~2 PFLOPS compute

- Great performance improvements over earlier GPUs (like A100)

H100 quickly became a reliable choice for many AI workloads.

NVIDIA H200 (Enhanced Hopper)

Launched in 2024 - 25, H200 keeps the Hopper architecture but increases memory:

- 141GB HBM3e memory (~4.8 TB/s bandwidth)

- Similar FP8 compute throughput as H100 (~2 PFLOPS)

- Better suited to memory-intensive models or longer contexts

H200 improves throughput (up to 1.6× faster than H100) by fitting larger batches into memory, making training and inference smoother.

NVIDIA B200 (Blackwell)

The new flagship (2025), B200 introduces major upgrades:

- 192GB HBM3e memory (dual-die, ~8.0 TB/s bandwidth)

- Massive compute (~4.5 PFLOPS FP8 dense, up to ~9 PFLOPS with FP4)

- Dual-chip architecture, appearing as a single GPU

- Next-gen Transformer Engine supporting FP4 precision for inference

- 5th-gen NVLink (1.8 TB/s), improving multi-GPU scalability

The B200 significantly speeds training (up to ~4× H100) and inference (up to 30× H100), perfect for the largest models and extreme contexts.

Quick comparison

Key Considerations for Choosing GPUs

Memory Capacity & Bandwidth

LLMs with long contexts (like DeepSeek-R1’s 128K tokens) heavily rely on GPU memory.

Compute Performance & Precision (FP8 to FP4)

Compute performance determines training speed.

Architectural Differences: Hopper vs. Blackwell

Architectural features influence scaling and usability:

- Monolithic (H100/H200) vs. Dual-chip (B200):

B200 provides more compute and memory, simplifying scaling for extremely large models. - NVLink Connectivity:

B200’s NVLink 5 (1.8 TB/s) doubles H100’s connectivity, improving multi-GPU training efficiency dramatically. - Other enhancements in B200:

Hardware reliability (RAS Engine), secure AI computing, and data decompression hardware - making it ideal for enterprise-scale deployments.

Suitability by Workload

Pre-training Large Models (100B+ parameters)

Fine-tuning and Reinforcement Learning (RLHF)

Long-context Inference and RAG

Performance Benchmarks (Real-World Estimates)

- H100: Widely available, prices dropping due to maturity.

- H200: Slightly more expensive than H100 (10 - 20%), availability ramping up.

- B200: High premium initially (25%+ over H200), limited availability early in 2025 but exceptional long-term performance and efficiency.

Pricing & Availability

Cloud vs. On-Premise

- Cloud: Offers immediate, flexible access (AWS, Azure, Google). Ideal for rapid prototyping and scaling.

- On-Prem: High initial investment but can be more cost-effective and controlled at scale, especially with B200’s energy efficiency.

Planning Your GPU Infrastructure (Next 6 - 12 Months)

Conclusion

Choosing between NVIDIA’s H100, H200, and B200 GPUs depends on your specific LLM training and inference needs:

Aligning GPU selection with model complexity, context length, and scaling goals, will help you get your project to market efficiently and enable scaling over time as users all over the world fall in love with and come to depend on the outcome of your big idea.

.png)